This week, when I came back from the office, I saw this tweet from Adam Bertram:

It got me curious how to script this. I had written a script in the past to remove packages from a distribution point (DP), so let's try out this scenario.

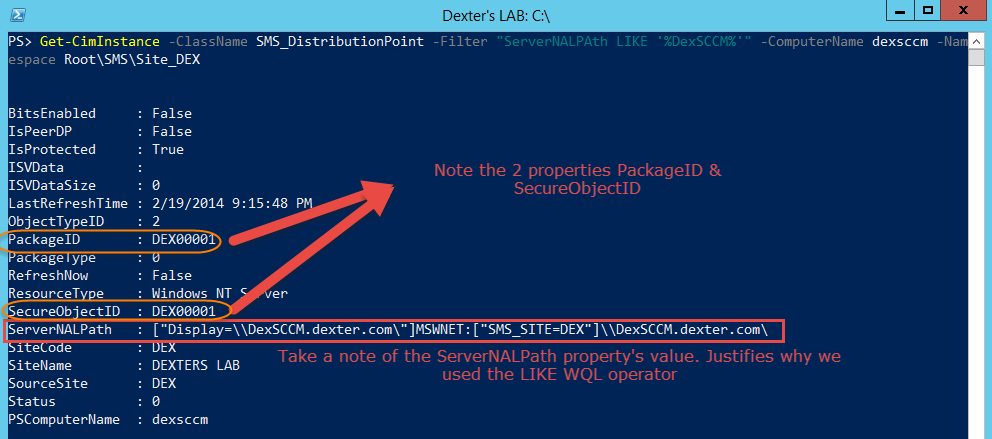

The class to query is the SMS_DistributionPoint class and the property to be used for filtering is ServerNALPath. My Lab has only one DP named "DexSCCM" so gonna use that in the filter. Note the WQL operator used here for filtering is LIKE not '='.

This will give you a bunch of objects back , below is one of the objects screenshot:

Note - Please take a moment to go to the MSDN documentation of the SMS_DistributionPoint WMI Class and pay attention to the ObjectTypeID, SecureObjectID and PackageID properties.

From the above Objects I can get the PackageID or SecureObjectID and get an instance of the appropriate Object based on ObjectTypeID. :)

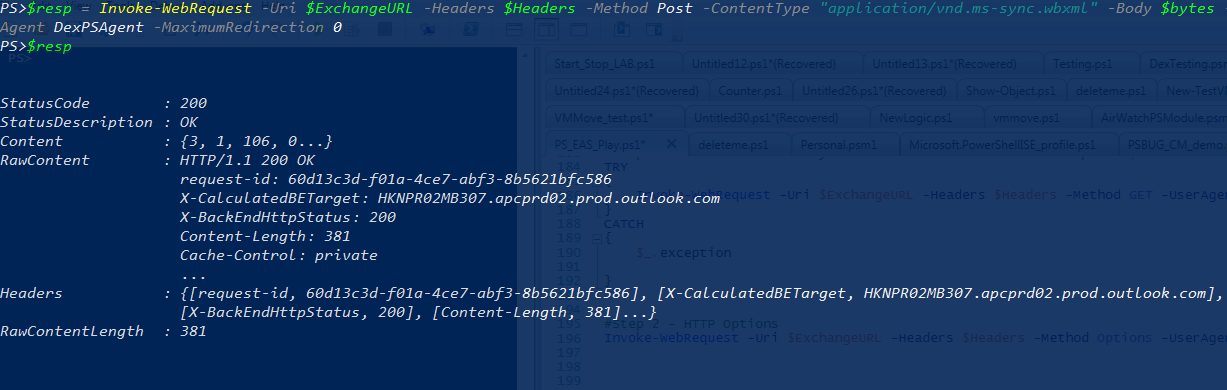

For the above Screenshot the ObjectTypeID is 2 which as per MSDN documentation says it corresponds to a SMS_Package class so let me get the instance of SMS_Package having the PackageID "DEX00001"

It works ! Got the Object back and following this approach can get the Objects stored on the DP.

But there is a small catch when the ObjectTypeID is 31 (SMS_Application) then we can't use the PackageID as a filter with the SMS_Application Class because it is a lazy property. If you don't believe me go ahead and try it (already did). So we have to use another property ModelName to filter.

To assist on this wrote a small Function called Get-DPContent (download it) which allows you to specify a DPName and the ObjectType (application, package, image etc) you want report of being stored in a DP. One can modify the Function to include all details for the Objects but I was just looking for the names of the packages, applications etc. Below is how you use it (once you have it dot sourced)

By default it only looks for Packages

One has to specify the ObjectType in order to look for Applications :

Hope this helps someone, trying to generate a list of Packages on a DP :)

This can be modified to work with SCCM 2007 too but seriously are you still running that :P

Cheers !

Resources:

Technet Gallery: Get list of Packages stored on a DP in ConfigMgr/ SCCM 2012

http://gallery.technet.microsoft.com/Get-list-of-Packages-35208e83/

SMS_Distribution WMI Class

http://msdn.microsoft.com/en-us/library/hh949735.aspx


The class to query is SMS_DistributionPoint, and the property to filter on is ServerNALPath. My lab has only one DP, named "DexSCCM", so I'll use that in the filter. Note that the WQL operator used here is LIKE, not '=': ServerNALPath holds the DP's full NAL path rather than just its name, so the name has to be matched with % wildcards.

```powershell
Get-CimInstance -ClassName SMS_DistributionPoint -Filter "ServerNALPath LIKE '%DexSCCM%'" -ComputerName dexsccm -Namespace Root\SMS\Site_DEX
```

This will give you a bunch of objects back , below is one of the objects screenshot:

Note: please take a moment to read the MSDN documentation for the SMS_DistributionPoint WMI class and pay attention to the ObjectTypeID, SecureObjectID and PackageID properties.

From these objects I can take the PackageID or SecureObjectID and, depending on the ObjectTypeID, get an instance of the appropriate object. :)
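To list only those three properties for every entry on a DP, the query above can be driven by a small helper that builds the filter for any DP name. This is a sketch: `New-DPFilter` is a hypothetical helper name, and the commented example assumes this lab's server name (dexsccm) and site code (DEX).

```powershell
# Hypothetical helper (not part of ConfigMgr): builds the WQL filter used above.
# The DP name is wrapped in % wildcards because it is only part of ServerNALPath.
function New-DPFilter {
    param(
        [Parameter(Mandatory)]
        [string]$DPName
    )
    "ServerNALPath LIKE '%$DPName%'"
}

# Example against the lab in this post (server dexsccm, site code DEX);
# adjust -ComputerName and -Namespace for your own site.
# Get-CimInstance -ClassName SMS_DistributionPoint -Filter (New-DPFilter -DPName 'DexSCCM') -ComputerName dexsccm -Namespace root\SMS\site_DEX |
#     Select-Object PackageID, ObjectTypeID, SecureObjectID
```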

In the screenshot above, the ObjectTypeID is 2, which the MSDN documentation says corresponds to the SMS_Package class, so let me get the SMS_Package instance with the PackageID "DEX00001":

```
PS> Get-CimInstance -ClassName SMS_Package -Filter 'PackageID="DEX00001"' -ComputerName dexsccm -Namespace Root\SMS\Site_DEX

ActionInProgress : 0
AlternateContentProviders :
Description : This package is created during installation.
ExtendedData :
ExtendedDataSize : 0
ForcedDisconnectDelay : 5
ForcedDisconnectEnabled : False
ForcedDisconnectNumRetries : 2
Icon :
IconSize : 0
IgnoreAddressSchedule : False
ISVData :
ISVDataSize : 0
IsVersionCompatible : True
Language :
LastRefreshTime : 2/9/2014 2:23:00 PM
LocalizedCategoryInstanceNames : {}
Manufacturer : Microsoft Corporation
MIFFilename :
MIFName :
MIFPublisher :
MIFVersion :
Name : User State Migration Tool for Windows 8
NumOfPrograms : 0
PackageID : DEX00001
PackageSize : 48381
PackageType : 0
PkgFlags : 0
PkgSourceFlag : 2
PkgSourcePath : C:\Program Files (x86)\Windows Kits\8.1\Assessment and Deployment Kit\User State Migration Tool
PreferredAddressType :
Priority : 2
RefreshPkgSourceFlag : False
RefreshSchedule :
SecuredScopeNames : {Default}
SedoObjectVersion : FF4B4436-5124-4875-ADB3-168BF444068C
ShareName :
ShareType : 1
SourceDate : 2/9/2014 2:06:15 PM
SourceSite : DEX
SourceVersion : 1
StoredPkgPath :
StoredPkgVersion : 1
Version : 6.3.9431.0
DefaultImageFlags : 2
IsPredefinedPackage : False
TransformAnalysisDate : 1/1/1980 5:30:00 AM
TransformReadiness : 0
PSComputerName : dexsccm
```

It works! I got the object back, and by following this approach I can get all the objects stored on the DP.

There is a small catch, though: when the ObjectTypeID is 31 (SMS_Application), we can't use PackageID as a filter on the SMS_Application class, because there it is a lazy property. If you don't believe me, go ahead and try it (I already did). So we have to filter on another property, ModelName, instead.
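The lookup rule can be captured in a small function that, given one SMS_DistributionPoint entry, returns the class and WQL filter needed to fetch the underlying object. This is a sketch under two assumptions: only the two object types discussed here (2 and 31) are handled, and for applications the entry's SecureObjectID holds the value to match against ModelName. `Get-DPObjectQuery` is a hypothetical name.

```powershell
# Hypothetical helper: maps one SMS_DistributionPoint entry to the class and
# WQL filter needed to fetch the underlying object.
# Assumption: for applications, SecureObjectID holds the application's ModelName.
function Get-DPObjectQuery {
    param(
        [Parameter(Mandatory)]
        $Entry   # an SMS_DistributionPoint instance (or any object with the same properties)
    )
    switch ($Entry.ObjectTypeID) {
        2  { @{ ClassName = 'SMS_Package';     Filter = "PackageID = '$($Entry.PackageID)'" } }
        # PackageID is lazy on SMS_Application, so match on ModelName instead.
        31 { @{ ClassName = 'SMS_Application'; Filter = "ModelName = '$($Entry.SecureObjectID)'" } }
        default {
            # Other object types (images, drivers, ...) are not handled; nothing is returned.
            Write-Warning "ObjectTypeID $($Entry.ObjectTypeID) is not handled"
        }
    }
}

# Example (server and site code from this post):
# $q = Get-DPObjectQuery -Entry $dpEntry
# Get-CimInstance -ClassName $q.ClassName -Filter $q.Filter -ComputerName dexsccm -Namespace root\SMS\site_DEX
```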

To help with this, I wrote a small function called Get-DPContent (download it from the TechNet Gallery link under Resources) which lets you specify a DP name and the object type (application, package, image, etc.) you want a report of. You could modify the function to return all the details of each object, but I was only looking for the names of the packages, applications, etc. Below is how you use it (once you have dot-sourced it):

```
PS C:\> Get-DPContent -DPname dexsccm

DP      ObjectType Package                                 PackageID
--      ---------- -------                                 ---------
dexsccm Package    User State Migration Tool for Windows 8 DEX00001
dexsccm Package    Configuration Manager Client Package    DEX00002
```

By default, it only looks for packages.

To look for applications, you have to specify the ObjectType:

```
PS> Get-DPContent -DPname dexsccm -ObjectType Application -SCCMServer dexsccm

DP      ObjectType  Application                   Description
--      ----------  -----------                   -----------
dexsccm Application 7-Zip                         For 64-bit machines only
dexsccm Application PowerShell Community Exten... PSCX 3.1
dexsccm Application Quest Active Roles Managme... Quest Snapin for AD Admins...
dexsccm Application PowerShell Set PageFile       The Script will set the P...
dexsccm Application NotePad++                     Alternative to Notepad
```
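If you can't get hold of the original Get-DPContent, the same idea can be approximated in a few lines. The sketch below is not the author's function: `Get-DPContentSketch` and its `-SiteCode` parameter are hypothetical, the `-SCCMServer` default (the local computer) is an assumption, only packages (2) and applications (31) are handled, and it assumes an application's SecureObjectID on the DP matches its ModelName. Because SMS_Application keeps one instance per revision, it keeps only the instance flagged IsLatest.

```powershell
# Hypothetical re-implementation sketch; not the original Get-DPContent.
function Get-DPContentSketch {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)]
        [string]$DPName,

        # Mirrors the original's default of reporting packages only.
        [ValidateSet('Package', 'Application')]
        [string]$ObjectType = 'Package',

        # Assumed default: query the local machine as the site server.
        [string]$SCCMServer = $env:COMPUTERNAME,

        # Hypothetical parameter: the site code used to build the namespace.
        [string]$SiteCode = 'DEX'
    )

    $typeId = @{ Package = 2; Application = 31 }[$ObjectType]
    $cim = @{ ComputerName = $SCCMServer; Namespace = "root\SMS\site_$SiteCode" }

    # One SMS_DistributionPoint entry per object of this type stored on the DP.
    $dpFilter = "ServerNALPath LIKE '%$DPName%' AND ObjectTypeID = $typeId"
    $entries = Get-CimInstance @cim -ClassName SMS_DistributionPoint -Filter $dpFilter

    foreach ($entry in $entries) {
        if ($ObjectType -eq 'Package') {
            $pkg = Get-CimInstance @cim -ClassName SMS_Package -Filter "PackageID = '$($entry.PackageID)'"
            [pscustomobject]@{ DP = $DPName; ObjectType = $ObjectType; Name = $pkg.Name; ID = $pkg.PackageID }
        }
        else {
            # PackageID is lazy on SMS_Application, so match on ModelName and
            # keep only the latest revision.
            $app = Get-CimInstance @cim -ClassName SMS_Application -Filter "ModelName = '$($entry.SecureObjectID)'" |
                Where-Object { $_.IsLatest }
            [pscustomobject]@{ DP = $DPName; ObjectType = $ObjectType; Name = $app.LocalizedDisplayName; ID = $entry.SecureObjectID }
        }
    }
}
```

For example, `Get-DPContentSketch -DPName dexsccm -ObjectType Application -SCCMServer dexsccm` would be the rough equivalent of the second call above, assuming the site code is DEX.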

Hope this helps someone trying to generate a list of packages on a DP. :)

This can be modified to work with SCCM 2007 too, but seriously, are you still running that? :P

Cheers!

Resources:

TechNet Gallery: Get list of Packages stored on a DP in ConfigMgr/SCCM 2012

http://gallery.technet.microsoft.com/Get-list-of-Packages-35208e83/

SMS_DistributionPoint WMI Class

http://msdn.microsoft.com/en-us/library/hh949735.aspx