This post gives some context on a practical use case for a MutEx in PowerShell.

From the MSDN documentation for the `Mutex` class, a MutEx is:

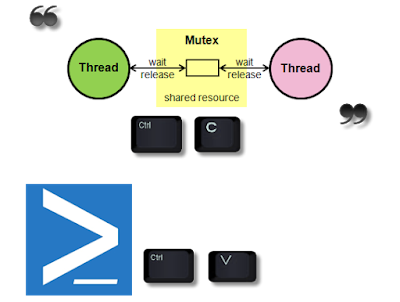

"A synchronization primitive that can also be used for interprocess synchronization."

Mut - Mutually

Ex - Exclusive

Recently, while deploying AzureStack, I ran into multiple failed deployments, partly because I was not paying attention.

Since the deployments failed, I had to dig into the code to see what went wrong.

AzureStack relies heavily on scheduled tasks (running in the System context) to run all the deployment tasks that create a POC.

The deployment status is also tracked in XML files (placed under `C:\ProgramData\Microsoft\AzureStack\`). Because these tasks run as separate PowerShell processes, their reads and writes to those XML files have to be coordinated to avoid conflicts.
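To make the problem concrete, here is a minimal sketch (not AzureStack code) of several separate PowerShell processes doing a read-modify-write on one shared file. Each worker is a background job started with `Start-Job`, which runs in its own process. The counter file, the mutex name and the `$worker` script block are hypothetical and exist only for this demo, and `[System.Threading.Mutex]::new` assumes PowerShell 5.0 or later. AzureStack itself uses the name `Global\AzureStackDeploymentStatus`; the `Global\` prefix puts the mutex in the machine-wide namespace, which matters when tasks run as SYSTEM while you work in an interactive session. The demo uses an unprefixed, session-local name because all of its processes run in the same session.

```powershell
# Demo only: the counter file and mutex name below are hypothetical.
$counterFile = Join-Path ([System.IO.Path]::GetTempPath()) "mutex-demo-$PID.txt"
$mutexName   = "MutexDemo-$PID"   # session-local name; AzureStack uses "Global\..."
Set-Content -Path $counterFile -Value 0

# Each job runs this script block in its own PowerShell process
$worker = {
    param($Path, $Name, $Iterations)

    # initiallyOwned = $false: open (or create) the mutex without owning it yet
    $mutex = [System.Threading.Mutex]::new($false, $Name)
    try
    {
        for ($i = 0; $i -lt $Iterations; $i++)
        {
            try
            {
                # Block until no other process owns the mutex
                [void]$mutex.WaitOne()
            }
            catch [System.Threading.AbandonedMutexException]
            {
                # A previous owner exited without releasing; this thread owns it now
            }

            try
            {
                # Read-modify-write: safe only because we hold the mutex
                $value = [int](Get-Content -Path $Path -Raw).Trim()
                Set-Content -Path $Path -Value ($value + 1)
            }
            finally
            {
                # Always release so the other processes can proceed
                $mutex.ReleaseMutex()
            }
        }
    }
    finally
    {
        $mutex.Dispose()
    }
}

$jobs = 1..4 | ForEach-Object {
    Start-Job -ScriptBlock $worker -ArgumentList $counterFile, $mutexName, 25
}
# Wait for all jobs, surface any errors, then remove the job objects
$jobs | Receive-Job -Wait -AutoRemoveJob

$final = [int](Get-Content -Path $counterFile -Raw).Trim()
Remove-Item -Path $counterFile
"Final count: $final"   # expected: Final count: 100 (4 jobs x 25 increments)
```

Without the `WaitOne`/`ReleaseMutex` pair, two jobs could read the same value and both write back value + 1, so updates could be lost and the final count could come out below 100.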

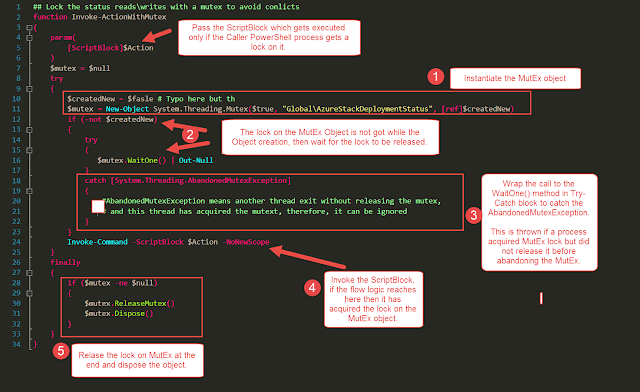

This is a simple but very important part of the whole process. While going through the code, I found this neat little function in the AzureStackDeploymentStatus.psm1 file:

```powershell
## Lock the status reads/writes with a mutex to avoid conflicts
function Invoke-ActionWithMutex
{
    param(
        [ScriptBlock]$Action
    )

    $mutex = $null
    try
    {
        # The original source reads "$createdNew = $fasle" (a typo). The undefined variable
        # evaluates to $null, which is falsy, and the constructor overwrites it via [ref] anyway.
        $createdNew = $false

        # Ask for initial ownership of a machine-wide (Global\) named mutex. Ownership is
        # granted only if this call creates the mutex; $createdNew reports whether it did.
        $mutex = New-Object System.Threading.Mutex($true, "Global\AzureStackDeploymentStatus", [ref]$createdNew)
        if (-not $createdNew)
        {
            try
            {
                # The mutex already existed, so wait until its current owner releases it
                $mutex.WaitOne() | Out-Null
            }
            catch [System.Threading.AbandonedMutexException]
            {
                # AbandonedMutexException means another thread exited without releasing the mutex,
                # and this thread has acquired the mutex; therefore, it can be ignored
            }
        }

        # Run the caller's script block in the current scope while holding the mutex
        Invoke-Command -ScriptBlock $Action -NoNewScope
    }
    finally
    {
        if ($null -ne $mutex)
        {
            $mutex.ReleaseMutex()
            $mutex.Dispose()
        }
    }
}
```
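Note that `WaitOne()` with no arguments waits indefinitely, so if the task holding the mutex hangs, every other task waits with it. A possible variation, sketched here under my own assumptions, takes the mutex name and a timeout as parameters. `Invoke-WithNamedMutex`, `-Name` and `-TimeoutSeconds` are hypothetical names, not part of the AzureStack module, and `::new` assumes PowerShell 5.0 or later.

```powershell
# Hypothetical variation on Invoke-ActionWithMutex: name and timeout are parameters.
function Invoke-WithNamedMutex
{
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)][string]$Name,
        [Parameter(Mandatory)][ScriptBlock]$Action,
        [int]$TimeoutSeconds = 30
    )

    # Open or create the mutex without taking ownership; WaitOne below acquires it
    $mutex = [System.Threading.Mutex]::new($false, $Name)
    try
    {
        $acquired = $false
        try
        {
            # WaitOne(TimeSpan) returns $false if the timeout elapses before ownership is granted
            $acquired = $mutex.WaitOne([TimeSpan]::FromSeconds($TimeoutSeconds))
        }
        catch [System.Threading.AbandonedMutexException]
        {
            # The previous owner exited without releasing; this thread owns the mutex now
            $acquired = $true
        }

        if (-not $acquired)
        {
            throw [System.TimeoutException]::new("Timed out after $TimeoutSeconds s waiting for mutex '$Name'.")
        }

        try
        {
            # Run the caller's action while holding the mutex
            & $Action
        }
        finally
        {
            # Reached only after a successful acquire, so this thread owns the mutex here
            $mutex.ReleaseMutex()
        }
    }
    finally
    {
        $mutex.Dispose()
    }
}

# Usage: the script block's output is returned to the caller
$sum = Invoke-WithNamedMutex -Name 'MutexDemo' -TimeoutSeconds 5 -Action { 2 + 3 }
$sum   # 5
```

Opening the mutex with `initiallyOwned = $false` and always calling `WaitOne` removes the `$createdNew` branch: the first caller and every later caller go through the same code path.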

This function piqued my interest in MutExes. In the same .psm1 file, it is used by both Get-AzureStackDeploymentStatus and Update-AzureStackDeploymentStatus.

Below is the Get-AzureStackDeploymentStatus definition:

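```powershell
function Get-AzureStackDeploymentStatus
{
    [CmdletBinding()]
    param()

    # Seed the status file from the template if it does not exist yet
    # ($statusFilePath and $StatusTemplate are defined outside this function, not shown)
    if (-not (Test-Path $statusFilePath))
    {
        Update-AzureStackDeploymentStatus $StatusTemplate | Out-Null
    }

    # Read and parse the status XML while holding the mutex
    Invoke-ActionWithMutex -Action {
        [xml](Get-Content $statusFilePath)
    }
}
```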

And here is the Update-AzureStackDeploymentStatus definition:

```powershell
function Update-AzureStackDeploymentStatus
{
    [CmdletBinding()]
    param(
        [Xml]$Status
    )

    # Create the folder if needed and save the status XML while holding the mutex
    # ($statusFileFolder and $statusFilePath are defined outside this function, not shown)
    Invoke-ActionWithMutex -Action {
        if (-not (Test-Path $statusFileFolder))
        {
            New-Item -Path $statusFileFolder -ItemType Directory | Out-Null
        }

        $Status.Save($statusFilePath)
    }
}
```
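One consequence of this design: Get-AzureStackDeploymentStatus and Update-AzureStackDeploymentStatus each acquire and release the mutex on their own, so a caller that reads the status, changes it and writes it back releases the lock between the read and the write, and another process could save its own change in that window. If the whole read-modify-write needs to be atomic, one option is to hold the mutex across all three steps. The sketch below does that against a throwaway XML file; the file path, the mutex name and the `<Status>`/`<Step>` layout are made up for the demo and are not the real AzureStack status schema.

```powershell
# Demo only: the file path, mutex name and XML layout are hypothetical.
$statusFilePath = Join-Path ([System.IO.Path]::GetTempPath()) "status-demo-$PID.xml"
'<Status><Step Name="Deploy" State="NotStarted" /></Status>' | Set-Content -Path $statusFilePath

$mutex = [System.Threading.Mutex]::new($false, "StatusDemo-$PID")
try
{
    try
    {
        [void]$mutex.WaitOne()
    }
    catch [System.Threading.AbandonedMutexException]
    {
        # The previous owner exited without releasing; this thread owns the mutex now
    }

    try
    {
        # Read, modify and write back under one lock, so no other process
        # can save a change between our read and our write
        $status = [xml](Get-Content -Path $statusFilePath -Raw)
        $status.Status.Step.State = 'Completed'
        $status.Save($statusFilePath)
    }
    finally
    {
        $mutex.ReleaseMutex()
    }
}
finally
{
    $mutex.Dispose()
}

$state = ([xml](Get-Content -Path $statusFilePath -Raw)).Status.Step.State
Remove-Item -Path $statusFilePath
$state   # Completed
```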

This is powerful stuff. Since my first exposure to it, I have been reading up a lot on the topic and on how to use it in my own scripts.

This post will soon be followed by a series of posts on this topic.